CCI 2.1. Organism and Artifact

Behavior, Purpose, and Teleology

The CoEvolution Quarterly for Summer 1976 published a long interview in which Stewart Brand spoke with two major figures in the wider history of cybernetics, Gregory Bateson and Margaret Mead.[1] Married to each other during the formative years of cybernetics, the cultural anthropologists Bateson and Mead both attended the fabled Macy Conferences presided over by the neuroscientist Warren McCulloch. Running from 1946 to 1953, this series began under the lucid title “Circular Causal and Feedback Mechanisms in Biological and Social Systems.” In 1948, Macy attendee Norbert Wiener published his field-defining work Cybernetics or Control and Communication in the Animal and the Machine.[2]

As Brand steers the conversation toward Bateson’s and Mead’s recollections of these events, the discussion turns to a foundational paper. During the interview, Mead calls it “the first great paper on cybernetics.” Bateson appears to cite the reference in detail off the top of his head: “Rosenblueth, Wiener and Bigelow. ‘Behavior, Purpose and Teleology,’ Philosophy of Science, 1943.” Let us open this paper up to see how these authors align “the animal and the machine” some years before Wiener’s definitive treatment in Cybernetics.

The text begins: “This essay has two goals. The first is to define the behavioristic study of natural events and to classify behavior. The second is to stress the importance of the concept of purpose.”[3] Indeed, the essay will conclude by insisting on a crucial distinction between the concept of purpose and the concept of cause. “Behaviorist” schools of animal study and human psychology had already flourished in the 1920s and 1930s, as in B. F. Skinner’s 1938 study of operant conditioning, The Behavior of Organisms. The methodological innovation in “Behavior, Purpose, and Teleology,” starting from the behavioral study of organisms, is to extend that approach to the analysis of machine behaviors as “natural events” in their own right. As later announced in the subtitle of Wiener’s Cybernetics, here “the animal and the machine” will be treated on the same conceptual plane.

During the same conversation with Brand, Bateson remarked that this paper “didn’t record an experiment, it reported on the formal character of seeking mechanisms . . . self-corrective mechanisms such as missiles.” The gestation of cybernetics during World War II, in Wiener’s work on fire control for Allied anti-aircraft guns, is already in evidence. Attention to the formal character of goal-seeking systems will be intrinsic to the cybernetic mode of observation. Moreover, this discourse about goal-directed behavior marks its own goals as a discourse. Its first goal is “to define the behavioristic study of natural events,” as opposed to the study of internal functions. Its second is to derive from behavioristic considerations a scientifically precise “concept of purpose,” applicable to systems whose modes of operation supersede Aristotle’s classical theses on causality.

Bateson describes this essay’s abiding accomplishment: “It was a solution to the problem of purpose. From Aristotle on, the final cause has always been the mystery.” Here is the matter of teleology that our authors propose to recuperate. Modern science famously rejected traditional teleological explanations as suffering a metaphysical hangover positing God, or His design decisions, as the “final cause” for the way things are or for what they become. The classical notion is that the telos of any particular being—its end, aim, or goal—determines its proper purpose and behavior: to act so as to arrive at the final state that God has preordained for it. But as God’s purposes can only be a mystery, this was reasonably considered to be no way to proceed toward a rational understanding of “natural events.”

“Behavior, Purpose, and Teleology” sets itself the task of redefining the concept of telos or goal in a natural, material, and secular manner fit for scientific investigation. The primary argument will be that “a uniform behavioristic analysis is applicable to both machines and living organisms, regardless of the complexity of the behavior.” Note that the emphasis here is on behavioral uniformity across system types: both organisms and artifacts may be said to behave so as to accomplish goals. However, while behavioral analysis closes the gap between organisms and artifacts, it does not imply that either may be reduced to the other.

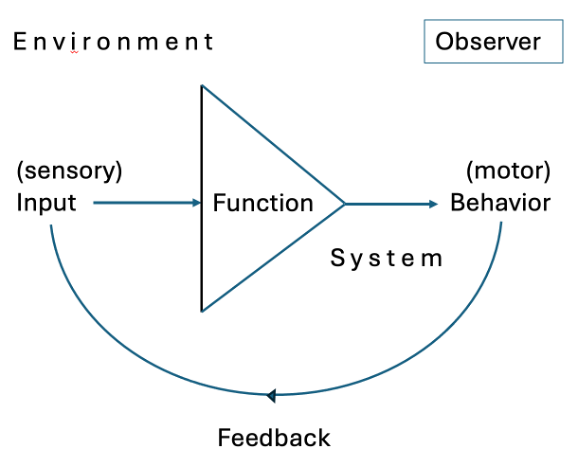

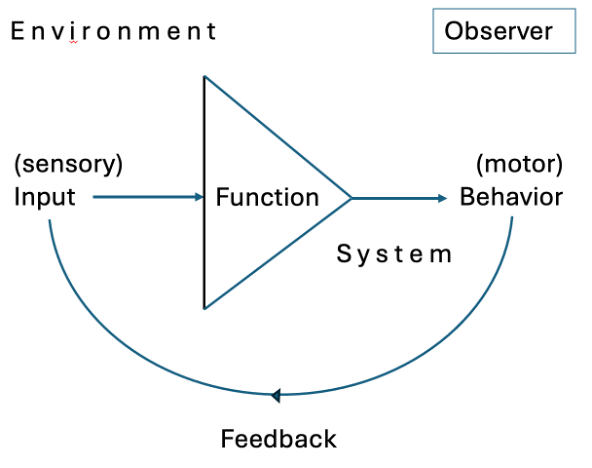

The logical train of this argument is founded on the distinction between behavior and function. The essay posits that the behavioristic study of “natural events”—that is, of the gamut of physical, biological, and artifactual objects and processes—observes a fundamental distinction between different modes of system operation. Given a system taken as an object of investigation, one can examine (1) how it works = its function, or (2) what it does = its behavior. Precision of observation is gained by adhering to this basic difference in the kinds of system operations.

—Input and output: Input impinges on the system from its environment, and its reception modifies the system. The system reacts to that modification by producing an output that in turn modifies its environment. Behavioristic study starts by observing the manifest outputs of systems and then works back from there to inputs and functions.

—Function: The functions by which the system processes inputs and produces behaviors are internal to the system. As a result, how a thing works may not be readily observable. For instance, the corporeal functions of organisms are largely opaque, or observable only piecemeal by means of elaborate devices such as CAT scans or scopes of various sorts.

Sidebar: In cybernetics, a system whose functions or interior workings are strictly unobservable is called a black box. In this case, one must infer from its behavior—its responses to inputs—how it accomplishes its functioning. Here is Wiener’s definition from Cybernetics: “I shall understand by a black box a piece of apparatus, such as four-terminal networks with two input and two output terminals, which performs a definite operation on the present and past of the input potential, but for which we do not necessarily have any information of the structure by which this operation is performed” (2nd ed., xi).

—Behavior: The object of the behavioral study of natural events is the output manifested by the system. Due to the functioning of the system, its behaviors enter the environment. And relative to a system’s internal functions, its external behaviors—the things a thing does as a specifiable material object operating in the world—are generally observable.

—Function and Behavior: Sufficiently complex systems exhibit these two distinct yet connected modes of operation. Function and behavior call for different modes of observation. As codified in the mechanistic worldview of modern science, the traditional understanding is that to comprehend the functions of systems, one takes the object apart to see how it is put together. Cybernetics will offer holistic challenges to this reductionistic philosophy. In particular, it will point out that such mechanistic cause-effect approaches must fail to bring about a comprehensive understanding of organic functions.
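Wiener’s black box, from the sidebar above, can be made concrete with a short Python sketch. The apparatus here is hypothetical and of our own devising. We assume an internal rule that blends each new input with the previous output, so that the box’s response depends on the present and past of the input. The observer sees only inputs and outputs, and the names `BlackBox` and `probe` are illustrative.

```python
class BlackBox:
    """A hypothetical apparatus whose internal rule is hidden from the observer.

    Each response blends the present input with the previous output, so the
    output depends on the present and past of the input.
    """

    def __init__(self):
        self._state = 0.0  # internal structure, not inspected by the observer

    def respond(self, signal):
        self._state = 0.5 * self._state + 0.5 * signal
        return self._state


def probe(box, inputs):
    """Behavioral study: feed chosen inputs and record only the outputs."""
    return [box.respond(signal) for signal in inputs]


# An impulse: one unit of input followed by silence.
impulse_response = probe(BlackBox(), [1.0, 0.0, 0.0, 0.0])
print(impulse_response)  # [0.5, 0.25, 0.125, 0.0625]
```

The halving pattern lets an observer infer, without opening the box, that it retains a geometrically fading trace of past input. That inference is a claim about behavior only. Quite different internal constructions could produce the same outputs.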

Let us now dive back into the detail of “Behavior, Purpose, and Teleology.” With the selection of behavior over function as the proper focus of scientific investigation into uniformities between organisms and artifacts, a distinction is now drawn between active and passive behaviors. This conceptual pair reintroduces the contextual distinction between environment and system as distinct sources of energy. Behavior takes energy: the question here is whether the immediate source of that energy resides within or without the system.

Active behavior is that in which the object is the source of the output energy involved in a given specific reaction. . . . In passive behavior . . . all the energy in the output can be traced to the immediate input.

For an example of passive behavior, the text suggests “the soaring flight of a bird.” Let us elaborate on this image. In contrast to such passive behavior, the bird’s beating its wings to launch into flight would be an active behavior, an action deriving from the bird’s internal functions of energy transformation. But once it gets high enough into the air, it can tap its environmental affordances—such as the kinetic and thermal energies of the wind—rather than its own resources, to keep itself aloft. Such behavior is passive simply in that the energy for flight now arrives from outside the system.[4]

Behavior has now been distinguished from function, and active behavior has been distinguished from passive behavior. The next distinction selects active behavior and parses it into purposeful active behavior versus purposeless (or random) active behavior.

The term purposeful is meant to denote that the act or behavior may be interpreted as directed to the attainment of a goal—i.e., to a final condition in which the behaving object reaches a definite correlation in time or in space with respect to another object or event.

Active behaviors are purposeful, according to this analysis, when they bring the system toward the fulfillment of a goal. Returning to our bird example, when its own actions launch it into flight, that active behavior is purposeful. But then, once aloft, it may just glide around in circles. This is the “soaring flight of a bird” that the essay cites as passive behavior, and it appears aimless as well.[5] Say now that the bird conceives a desire to arrive at a favored spot. It changes its aimless soaring in circles into an ostensibly purposeful, goal-directed activity. It has now internally posited an external goal toward which it directs its active behavior. It beats its wings once more and directs its flight. Until that goal is attained, it has a purpose to pursue. The phrase now applied to this active mode of purposeful behavior is voluntary activity:

The basis of the concept of purpose is the awareness of "voluntary activity." Now, the purpose of voluntary acts is not a matter of arbitrary interpretation but a physiological fact. When we perform a voluntary action what we select voluntarily is a specific purpose, not a specific movement.

But note: the notion of a voluntary action implies the presence of an agent or agency. That agent possesses some kind of will-to-action over which it has some control. Such matters are clearly not yet behaviors; they are closer to being functions. To bring the concept of purpose into focus, our authors have to shift from behavioral observations (say, of “movements”) to functional explanations. When active behaviors fulfill self-selected purposes, our authors posit, they may be considered voluntary acts. At the same time, the performance of these actions implies the prior emergence of those same purposes, which then call forth these particular behaviors. The emergence of purpose as an internal function of an organism may well be a “physiological fact,” but it is a fact reached by deduction from behavior.

The contrast between function and behavior remains salient as the authors now, without prior announcement, probe the question of purpose in relation to machines. They note that not all machines are properly understood as purposeful in themselves, even though they are designed, perfected, and handled by users with some goal in mind. While carrying out the extrinsic goals of their users, many machines come to no final state; they just keep going or repeating their behavioral repertoires:

. . . a clock [is] designed, it is true, with a purpose, but [it has] a performance which, although orderly, is not purposeful—i.e., there is no specific final condition toward which the movement of the clock strives . . .

In contrast, the aim of a purposeful act can be compared to the effort to hit some sort of target by which event the purpose is fulfilled. And some machines are literally designed to behave in this fashion. Forged in the practical military milieu of designing smart weaponry, cybernetics gestates in Wiener’s contributions to the design of a mechanism capable of factoring environmental input into self-corrective targeting. Our authors are willing to talk about machines of this type in terms of purposeful behavior:

Some machines, on the other hand, are intrinsically purposeful. A torpedo with a target-seeking mechanism is an example. The term servomechanisms has been coined precisely to designate machines with intrinsic purposeful behavior.

A machine such as a torpedo is said to be purposeful insofar as “the attainment of a goal” as a “final condition” is intrinsic to its performance. This particular mechanized weapon has been augmented with an internal guidance system serving its goal of destroying its target. But the larger category here, and the more fundamental object of the original cybernetics, is the servomechanism. This is an artifact of whatever sort that functions to manifest and/or maintain intrinsic, purposeful, goal-seeking behavior on the part of a mechanical system.

The technological servomechanism most often cited as a forerunner is the centrifugal governor that regulates the speed of steam engines, much as a thermostat regulates temperature.[6] Servomechanisms are mechanical control systems, and such automated control is now set forth as the cybernetic form of mechanical purpose. But how do such mechanisms function? As we are about to learn, they do so by means of a formal operation called “feed-back.” And despite its application to a distinct mode of “purposeful active behavior,” the concept of feedback renders the distinction between function and behavior—between input, response, and output—indeterminate once more.

Purposeful active behavior may be subdivided into two classes: "feed-back" (or "teleological") and "non-feed-back" (or "non-teleological"). The expression feed-back . . . may denote that some of the output energy of an apparatus or machine is returned as input; an example is an electrical amplifier with feed-back. The feed-back is in these cases positive—the fraction of the output which reenters the object has the same sign as the original input signal. Positive feed-back adds to the input signals, it does not correct them. The term feed-back is also employed . . . to signify that the behavior of an object is controlled by the margin of error at which the object stands at a given time with reference to a relatively specific goal. The feed-back is then negative, that is, the signals from the goal are used to restrict outputs which would otherwise go beyond the goal.

This essay effectively introduces the concept of feedback to disciplines beyond the confines of control engineering. The semantic paradox is that “positive” feedback is usually undesirable: if unchecked, it leads to runaway, the deleterious cascading amplification of system behaviors: “Positive feed-back adds to the input signals, it does not correct them.”[7] Alternatively, “negative” feedback has a minus sign because it dampens—counteracts rather than augments—succeeding outputs. Control by systemic “self-regulation” emerges from the balancing out of positive input and negative feedback. Here, “the signals from the goal are used to restrict outputs which would otherwise go beyond the goal.”
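A toy numerical sketch may help fix the sign convention. It is our own illustration, not drawn from the 1943 paper. In it, a hypothetical scalar state is updated by feeding part of its output back. In the positive case, the returned fraction adds to the signal. In the negative case, the deviation from a goal is returned with a minus sign. The function names and parameter values are assumptions chosen for readability.

```python
def positive_feedback(x0, fraction, steps):
    """A fraction of each output re-enters with the same sign and adds to the signal."""
    xs = [x0]
    for _ in range(steps):
        xs.append(xs[-1] + fraction * xs[-1])
    return xs


def negative_feedback(x0, goal, gain, steps):
    """The error relative to the goal is fed back with a minus sign, so each
    correction opposes the deviation and the state settles toward the goal."""
    xs = [x0]
    for _ in range(steps):
        error = xs[-1] - goal
        xs.append(xs[-1] - gain * error)
    return xs


runaway = positive_feedback(1.0, 0.5, 5)
regulated = negative_feedback(0.0, 10.0, 0.5, 5)
print(runaway)    # [1.0, 1.5, 2.25, 3.375, 5.0625, 7.59375]
print(regulated)  # [0.0, 5.0, 7.5, 8.75, 9.375, 9.6875]
```

In this toy model, the negative loop settles on its goal for any gain between 0 and 2. Above 2, each correction overshoots by more than the error it was meant to correct. The minus sign alone, then, does not guarantee self-regulation; the strength of the correction matters too.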

This brings us back to cybernetic teleology. In these instances, the goal—the telos—is not the back-propagation of some metaphysical archetype into worldly instantiation. It is the completion of some purposeful effort to make contact with a desired object or to maintain a desirable state. We are now given a strikingly absolute but also concrete description of purposeful behavior:

All purposeful behavior may be considered to require negative feed-back. If a goal is to be attained, some signals from the goal are necessary at some time to direct the behavior. . . . the behavior of some machines and some reactions of living organisms involve a continuous feed-back from the goal that modifies and guides the behaving object.

For instance, if the purpose is literally to hit a moving target, then the goal is to intercept and make some sort of contact with an object in motion. “Signals from the goal” could simply be sensory registration of the light that the targeted object emits or reflects, or of the sound waves its motion generates. These signals encode the current relation between the purposeful system and its target. They return as revised environmental input for the self-correction of succeeding behaviors. Then again, as an organic function within animal bodies, proprioception, or “self-feeling,” is distributed throughout nervous systems. It provides continuous feedback on efforts to direct motor behaviors toward their purposes, such as balanced locomotion or effective manipulation. In another instance, a “cerebellar patient” suffers a brain pathology that disrupts negative feedback regarding their voluntary motions, yielding “disorderly motor performances.” Additionally, negative feedback can assist purposeful behaviors that anticipate the target’s actions: “A cat starting to pursue a running mouse does not run directly toward the region where the mouse is at any given time, but moves toward an extrapolated future position.” This is possible, once again, because the cat can receive “signals from the goal” that its feline nervous system can use to continuously correct its own trajectory.
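The cat’s anticipatory pursuit can be sketched as a small simulation. Everything here is a hypothetical setup of our own, not taken from the essay or the paper:

- a mouse running in a straight line at constant speed;
- a faster cat;
- a crude lead rule, under which the cat estimates the mouse’s velocity from its last two observed positions and projects it forward by roughly the time needed to close the current distance.

Both chasers receive the same “signals from the goal”; they differ only in how they use them.

```python
import math


def step_toward(pos, aim, speed):
    """Move from pos toward aim by at most `speed` units."""
    dx, dy = aim[0] - pos[0], aim[1] - pos[1]
    dist = math.hypot(dx, dy)
    if dist <= speed:
        return aim
    return (pos[0] + speed * dx / dist, pos[1] + speed * dy / dist)


def chase(predictive, cat_speed=1.5, mouse_velocity=(1.0, 0.0), max_steps=100):
    """Return the step at which the cat can pounce, or None if it never can.

    Each step the cat observes the mouse's position (its "signal from the goal").
    A direct chaser aims at where the mouse is now; a predictive chaser
    extrapolates the mouse's motion from its last two observed positions.
    """
    cat, mouse = (0.0, 0.0), (0.0, 10.0)
    previous = None  # the mouse's previously observed position
    for step in range(max_steps):
        dist = math.dist(cat, mouse)
        if dist <= cat_speed:
            return step  # close enough to pounce
        aim = mouse
        if predictive and previous is not None:
            vx, vy = mouse[0] - previous[0], mouse[1] - previous[1]
            lead = dist / cat_speed  # rough time-to-reach estimate, in steps
            aim = (mouse[0] + vx * lead, mouse[1] + vy * lead)
        cat = step_toward(cat, aim, cat_speed)
        previous = mouse
        mouse = (mouse[0] + mouse_velocity[0], mouse[1] + mouse_velocity[1])
    return None


direct_steps = chase(predictive=False)
predictive_steps = chase(predictive=True)
print(direct_steps, predictive_steps)
```

Under these assumptions, the extrapolating chaser closes in within fewer steps than the one aiming at the mouse’s present position. The direct chaser’s path bends into a pursuit curve. If the mouse stands still, the two strategies coincide, since there is no motion to extrapolate.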

Rosenblueth, Wiener, and Bigelow recuperate and redefine teleology by substituting the concepts of purpose and behavior for cause and effect, especially as mapped onto the distinction between behavior and function in relation to systemic objects:

We have restricted the connotation of teleological behavior by applying this designation only to purposeful reactions which are controlled by the error of the reaction—i.e., by the difference between the state of the behaving object at any time and the final state interpreted as the purpose. Teleological behavior thus becomes synonymous with behavior controlled by negative feed-back . . . . [In contrast,] causality implies a one-way, relatively irreversible functional relationship, whereas teleology is concerned with behavior, not with functional relationships.
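The successive dichotomies traced above can be summarized as a decision procedure. This encoding is hypothetical and our own. The three yes/no fields of `Observation` stand for the questions the essay asks in turn. The example classifications are assumptions rather than the authors’ verdicts; in particular, we treat the clock’s wound spring as an internal energy source.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """What a behavioristic observer records about a system (hypothetical fields)."""
    name: str
    output_energy_from_object: bool  # active if the object supplies the output energy
    directed_to_goal: bool           # purposeful if aimed at a final condition
    corrected_by_error: bool         # teleological if guided by negative feedback


def classify(obs):
    """Walk the successive dichotomies and return the chain of labels."""
    if not obs.output_energy_from_object:
        return ["passive"]
    if not obs.directed_to_goal:
        return ["active", "purposeless (random)"]
    if not obs.corrected_by_error:
        return ["active", "purposeful", "non-feed-back (non-teleological)"]
    return ["active", "purposeful", "feed-back (teleological)"]


examples = [
    Observation("soaring bird", False, False, False),
    Observation("clock", True, False, False),  # assumes the spring counts as internal energy
    Observation("target-seeking torpedo", True, True, True),
]
for obs in examples:
    print(obs.name, "->", " / ".join(classify(obs)))
```

The order of the questions matters. The feed-back question is asked only of behavior already judged active and purposeful, just as each distinction in the essay subdivides the one before it.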

On the one hand, as given in classical mechanistic logic, causes have no depth; they are physical events that make no cognitive demands; they just occur and proceed to their effects—configurations of atoms bouncing around, to some extent in determinable ways. On the other hand, the proto-cybernetic logic of purpose is grounded here in voluntary organic behaviors alongside the functioning of animal metabolisms and nervous systems. There are machines—from servomechanical apparatuses to computational systems—that can be observed to behave in similar fashion. But the distinction of function remains: “While the behavioristic analysis of machines and living organisms is largely uniform, their functional study reveals deep differences.” And this is a difference that makes a difference.

Proverbial causes are posited to explain how things work. Cybernetic purposes are posited to explain why systems guide their functioning toward particular goals. The classical analysis of causes made provision neither for the recognition of systems as such nor for their constitutive feedback processes. Instead, it codified a linear relation between causes and effects. One sees this classical scheme still inhabiting the mechanistic dynamics of an earlier physics, in which all causality is reduced to the transfer of forces among solid atoms in motion. This is the “billiard ball” scenario that underwrites prior fables of a deterministic world. In sum, the contribution of “Behavior, Purpose, and Teleology” is to complicate, if not overcome, the classical linearity binding causes and effects. In its place, the essay puts a cybernetic circularity of purposes and behaviors adequate to provide a formal template for living systems.

Organic cybernetics develops out of and alongside these primary systemic uniformities as well as distinctions between organisms and artifacts. In organisms, purpose-behavior relations rise to their own orders of complexity beyond the generally more straightforward cause-effect relations traceable in machines. The abiding innovation here is that purpose-behavior relations of whatever sort produce regulatory dynamics when they are circular, that is, when they operate by means of feedback loops that cycle a message about outputs back to inputs, directing further functions to produce further behaviors converging on set points or voluntary goals. In short, organic cybernetics is set to comprehend (1) observational attention to system behaviors, (2) cognitive research into corporeal and agential functions, and (3) contextual reflections on larger natural ensembles from organizations to ecosystems taken to Gaia’s planetary limit. In the aggregate, its thinkers—including Norbert Wiener—have built up a complex counter-discourse to mainstream control theory and machine computation.

[1] Stewart Brand, “For God’s Sake, Margaret: Conversation with Gregory Bateson and Margaret Mead,” CoEvolution Quarterly 10 (Summer 1976): 32–44.

[2] Norbert Wiener, Cybernetics or Control and Communication in the Animal and the Machine, 2nd ed. (1948; Cambridge, MA: MIT Press, 1961). On Heinz von Foerster’s recommendation, the remaining meetings continued simply as the Macy Conferences on Cybernetics.

[3] Arturo Rosenblueth, Norbert Wiener, and Julian Bigelow, “Behavior, Purpose, and Teleology,” Philosophy of Science 10:1 (January 1943): 18–24.

[4] A proto-cybernetic attention to control complicates this scenario: the bird can still deftly control its body and thus affect the disposition of its flight. And yet control must emerge from within this organism as a function of its corporeal competence. While we can observe the soaring behavior, the subtle internal operations of the bird’s sensory and nervous functions remain something of a mystery.

[5] Or again—although this theme does not appear in this article—we could observe it as play. There is no aim to get somewhere, there is just, it would seem, the pleasure of riding the wind. But isn’t achieving corporeal pleasure itself a kind of self-referential purpose? We can come back to this.

[6] Our word governor and Wiener’s neologism cybernetics both derive from kubernetes, the Greek term for steersman. Wiener, Cybernetics, 2nd ed., 11.

[7] See Anthony Chaney, Runaway: Gregory Bateson, the Double Bind, and the Rise of Ecological Consciousness (Chapel Hill: University of North Carolina Press, 2017).